Understanding library impacts on student learning

In the Library with the Lead Pipe is pleased to welcome guest author Derek Rodriguez. Derek serves as a Program Officer with the Triangle Research Libraries Network where he supports collaborative technology initiatives within the consortium and is project manager for the TRLN Endeca Project. He is a Doctoral candidate at the School of Information and Library Science at The University of North Carolina at Chapel Hill, and is the Principal Investigator of the Understanding Library Impacts project.

Value for money in higher education

These are challenging times for colleges and universities. Every week it seems a new article or book is published expressing concerns about college costs,[1] low graduation rates, and what students are learning.[2] We also don’t have to look very hard to find reports computing the economic benefits of a college education to individuals.[3] Clearly, U.S. colleges and universities are under pressure to demonstrate that the value of an undergraduate education is worth its cost.

Graduation rates are important measures. Personal income is certainly a measure that hits home for most of us during these difficult economic times. However, stakeholders in higher education have had their eyes on a different set of metrics for many years: student learning outcomes. A recent example is A Test of Leadership, perhaps better known as the Spellings Commission report, in which the U.S. Department of Education raised concerns about the quality of undergraduate student learning. The report called for measuring student learning and releasing “the results of student learning assessments, including value-added measurements that indicate how students’ skills have improved over time.”[4] In recent years, higher education has responded with new tools to assess and communicate student learning such as the Voluntary System of Accountability.[5]

As colleges and universities grapple with this challenge, academic libraries are also seeking ways to communicate their contributions to student learning. The recently revised draft Standards for Libraries in Higher Education from the Association of College and Research Libraries (ACRL) signals the importance of this issue for academic libraries.[6] The first principle in the revised standards, Institutional Effectiveness, states that:

“Libraries define, develop, and measure outcomes that contribute to institutional effectiveness and apply findings for purposes of continuous improvement.”[7]

And an accompanying performance indicator reads:

“Libraries articulate how they contribute to student learning, collect evidence, document successes, share results, and make improvements.”[8]

While libraries have made significant progress in user-oriented evaluation in recent decades, we still lack effective methods for demonstrating library contributions to student learning. Unless we develop adequate instruments (and generate compelling evidence), libraries will be left out of important campus conversations.

In this post I review current approaches to this problem and suggest new methods for addressing this challenge. I close by introducing the ‘Understanding Library Impacts’ protocol, a new suite of instruments that I designed to fill this gap in our assessment toolbox.

The challenge of linking library use to student learning

Demonstrating connections between library use and undergraduate student achievement has proven a difficult task through the years. Several authors have suggested outcomes to which academic libraries contribute such as: retention, grade point average, and information literacy outcomes.[9] I review a few of these efforts below.

Retention

Retention is a measure of the percentage of college students who continue in school and do not ‘drop out.’ A handful of studies have investigated relationships between library use and retention. Lloyd and Martha Kramer found a positive relationship between library use and persistence: students who borrowed books from the library dropped out 40% less often than non-borrowers.[10] Elizabeth Mezick explored the impact of library expenditures and staffing levels on retention and found a moderate relationship between expenditures and retention.[11] Several authors report a different ‘library effect’ on retention: holding a job in the library.[12] This finding is supported by evidence that holding a campus job, especially in an organization that supports the academic mission, is associated with “higher levels of [student] effort and involvement”[13] in the life of the university and should logically lead to increased retention. Those of us who have worked in academic libraries have probably observed this mechanism at work with students we have known.

However, I believe relying exclusively on this measure is problematic. First, numerous factors influence retention and it can be difficult to isolate library impact on retention without extensive statistical controls. Second, retention is an aggregate student outcome; it is not a student learning outcome. Retention is an important metric in higher education and we should seek connections between library use and this measure, but it does not satisfy our need to know how libraries contribute to student learning.

Grade point average

Several authors have attempted to correlate student use of the library with grade point averages (GPA). Charles Harrell studied many independent variables and found that GPA was not a significant predictor of library use.[14] Jane Hiscock, James Self, and Karin de Jager, among others, switched the dependent and independent variables in their studies and found limited positive correlation between library use and GPA.[15] Shun Han Rebekah Wong and T.D. Webb reported on a large-scale study with a sample of over 8,700 students grouped by major and level of study. In sixty-five percent of the groups, they found a positive relationship between use of books and A/V materials borrowed from the library and GPA.[16]

However, GPA-based studies have their problems. As Wong and Webb note, studies that use correlation as a statistical method cannot assure causal relationships between variables; they can only show an association between library use measures and GPA. As the old adage goes, ‘correlation does not imply causation.’ Do students achieve higher GPAs because they are frequent users of the library? Or do students who make better grades tend to use the library more? Without adequate statistical controls it is impossible to conclude library use had an impact on GPA. Also, as noted by Wong and Webb, it can be difficult to gain access to student grades to carry out this type of study.
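To make the point about statistical controls concrete, here is a minimal sketch using simulated data. Everything in it is an assumption made for illustration: the `motivation` variable, the coefficients, and the sample size are invented and do not come from any of the studies cited above. In the simulation, motivation drives both library visits and GPA, and library visits have no direct effect on GPA at all, yet the raw correlation between the two is strong.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def partial_corr(xs, ys, zs):
    """Correlation of xs and ys after controlling for a third variable zs."""
    r_xy = pearson(xs, ys)
    r_xz = pearson(xs, zs)
    r_yz = pearson(ys, zs)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def simulate(n=500, seed=0):
    """Simulated students (hypothetical): motivation drives both outcomes."""
    rng = random.Random(seed)
    motivation = [rng.random() for _ in range(n)]
    # Library visits depend on motivation plus noise.
    visits = [10 * m + rng.gauss(0, 1) for m in motivation]
    # GPA depends on motivation plus noise, and NOT on library visits.
    gpa = [1.5 + 2 * m + rng.gauss(0, 0.15) for m in motivation]
    return motivation, visits, gpa


motivation, visits, gpa = simulate()
raw_r = pearson(visits, gpa)
controlled_r = partial_corr(visits, gpa, motivation)
print(f"raw correlation between visits and GPA: {raw_r:.2f}")
print(f"after controlling for motivation:       {controlled_r:.2f}")
```

In this toy setup the association largely disappears once motivation is controlled for. Real studies rarely have a clean measure of the confounding factor, which is part of why GPA-based evidence of library impact is hard to interpret.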

Information Literacy Outcomes

Information literacy outcomes assessment is the most fully developed approach we have for demonstrating library contributions to undergraduate achievement. Broadly speaking, information literacy skills encompass competencies in locating and evaluating information sources and using information in an ethical manner. Instruction in these skills is a core offering in academic libraries and findings from Project Information Literacy suggest there is still plenty of work to do![17] ACRL has also created a suite of information literacy outcomes[18] to guide the design and evaluation of library instruction programs. Numerous methods have been used to assess information literacy skills including fixed-choice tests, analysis of student work, and rubrics.[19]

It is tempting to rely solely on student achievement of information literacy skills to demonstrate library contributions to student learning. However, a recent review of regional accreditation standards for four-year institutions suggests there is uneven support for doing so. Laura Saunders found three of six regional accreditation agencies specifically name information literacy as a desired outcome and assert the library’s prominent role in information literacy instruction and assessment of related skills.[20] Others rarely use the term “information literacy” in their standards. Instead, competencies such as “evaluating and using information ethically” appear in these standards as general education outcomes to be taught and assessed throughout the college curriculum. In part, I think this reaffirms for us that many in higher education associate information literacy outcomes with general education outcomes such as critical thinking.

While it may be encouraging for information literacy outcomes to be integrated into the college curriculum, I think this poses real difficulties when we attempt to isolate library contributions to these outcomes. If information literacy and critical thinking skills are inter-related, how are we to assess one set of skills, but not the other? Hilary Davis thoughtfully explored this issue in her post “Critical Literacy? Information!” finding that these competencies are intricately related and it is extremely difficult to teach (and assess) them independent of one another. If information literacy skills are taught across the curriculum, when, where, and by whom should they be assessed? Where does faculty influence stop and library influence begin?

Information literacy outcomes are integral to undergraduate education, but these are not the only learning outcomes that stakeholders are interested in. And information literacy is not the library’s sole contribution to student learning.

I believe we need to shift course in our assessment practices and tackle ‘head on’ the challenge of connecting library use in all its forms with learning outcomes defined and assessed in courses and programs on college and university campuses. We should also link our efforts to the learning outcomes frameworks used in the broader academic enterprise. Broadening our perspective will provide a better return on our assessment dollar.

Where to begin

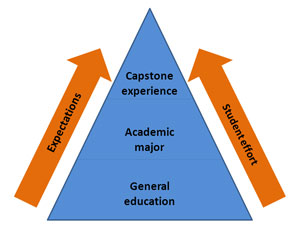

We can improve our ability to detect library impact on important student learning outcomes by carefully choosing our units of observation. Fortunately we can look to the literature of higher education assessment for clues.[21] My conclusion is that a one-size-fits-all approach to assessment is not likely to work for higher education or for library impact. Instead, our instruments should respect differences in students’ experiences. We should focus on the ‘high-impact’ activities in which faculty expect students to demonstrate their best work. Capstone experiences and upper-level coursework within the academic major seem to fit the bill for four-year institutions.

The academic major

Students majoring in the arts and humanities, the sciences, and the social sciences acquire different bodies of knowledge and learn different analytical techniques. We also know that learning activities, reward structures, and norming influences vary by discipline. This suggests the academic major plays a significant role in shaping expectations for student learning outcomes and the pathways by which they are achieved.[22] Shouldn’t student information behaviors vary by academic major as well? Our assessment tools should be sensitive to these differences.

The capstone experience and upper-level coursework

Capstone courses are culminating experiences for undergraduate students in which they complete a project “that integrates and applies what they’ve learned.”[23] I think we should be studying information use during these important times for several reasons. First, there is ample evidence that the time and energy students devote to college is directly related to achieving desired learning outcomes.[24] Students who work hard learn more. Furthermore, students exposed to high-impact practices such as capstone experiences are more likely to engage in higher order, integrative, and reflective thinking activities.[25] Finally, there is strong evidence that student learning is best detected later in the academic career.[26]

If faculty expectations are at their highest and student effort is at its peak during the capstone experience and in upper-level coursework, shouldn’t studying student information behaviors during these times yield valuable data about library impact?

Speaking the language of learning outcomes

Assessing information use during upper-level and capstone coursework in the academic major is only part of the puzzle. We also need to link library use to student learning outcomes that are meaningful to administrators and policy-makers. I’d like to share two frameworks for student learning outcomes which I think hold great promise.

The Essential Learning Outcomes and the VALUE Rubrics

The Association of American Colleges & Universities’ (AAC&U) Liberal Education and America’s Promise (LEAP) project[27] defined fifteen ‘Essential Learning Outcomes’ needed by 21st century college graduates such as critical and creative thinking, information literacy, inquiry and analysis, written and oral communication, problem solving, quantitative literacy, and teamwork. These outcomes are applicable in all fields and highly valued by potential employers. A companion AAC&U project called VALUE (‘Valid Assessment of Learning in Undergraduate Education’) generated rubrics that describe benchmark, milestone, and capstone performance expectations for each outcome. These rubrics are intended to serve as a “set of common expectations and criteria for [student] performance” to guide authentic assessment of student work and communicate student achievement to stakeholders.[28]

Tuning

Some student learning outcomes are discipline-specific. For instance, one would expect students majoring in chemistry, music, or economics to acquire different skills and competencies. A process called Tuning is intended to generate a common language for communicating these discipline-specific outcomes.[29] First developed as a component of the Bologna Process of higher education reform in Europe,[30] Tuning is a process in which teaching faculty consult with recent graduates and employers to develop common reference points for academic degrees so that student credentials are comparable within and across higher education institutions.[31] Expectations are set for associate, bachelor, and master degree levels. Generic second cycle or bachelor degree level learning expectations as defined by the European Tuning process are noted below. Recent work funded by the Lumina Foundation has replicated this work in three states to test its feasibility in the U.S.[32]

Subject-specific learning expectations for second cycle graduates[33]

- Within a specialized field in the discipline, demonstrates knowledge of current and leading theories, interpretations, methods and techniques;

- Can follow critically and interpret the latest developments in theory and practice in the field;

- Demonstrates competence in the techniques of independent research, and interprets research results at an advanced level;

- Makes an original, though limited, contribution within the canon and appropriate to the practice of a discipline, e.g. thesis, project, performance, composition, exhibit, etc.; and

- Evidences creativity within the various contexts of the discipline.

The VALUE rubrics are currently being evaluated in several studies[34] and colleges and universities have begun using them internally to articulate and assess student learning outcomes.[35] While the Tuning process hasn’t yet ‘taken off’ in the U.S., the Western Association of Schools and Colleges recently announced a new initiative to create a common framework for student learning expectations among its member institutions.[36] As colleges and universities experiment with and adopt these frameworks, we should incorporate them into our library assessment tools.[37]

New tools for generating convincing evidence of library impact

As part of my doctoral research I created the Understanding Library Impacts (ULI) protocol, a new suite of instruments for detecting and communicating library impact on student learning outcomes. The protocol consists of a student survey and a curriculum mapping process for connecting library use to locally defined learning outcomes and the VALUE and Tuning frameworks discussed above. Initially developed using qualitative methods,[38] the protocol has been converted to survey form and is undergoing testing during 2011. I illustrate how it works with a few results from a recent study.

A pilot project was conducted during the spring of 2011 with undergraduate history majors at two institutions in the U.S., a liberal arts college and a liberal arts university. Faculty members provided syllabi and rubrics regarding learning objectives associated with researching and writing a research paper in upper-level and capstone history courses. History majors completed the online ULI survey after completing their papers.

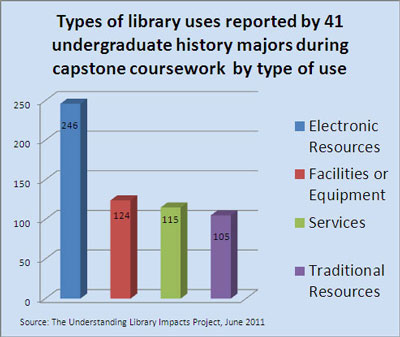

First, students identified the types of library resources, services, and facilities they used during work on their research papers, including:

- Electronic resources, such as the library catalog, e-resources and databases, digitized primary sources, and research guides.

- Traditional resources, such as books, archives, and micro-formats.

- Services, such as reference, instruction, research consultations, and interlibrary loan.

- Facilities and equipment, such as individual and group study space, computers, and printers.

The forty-one students who participated in the pilot project collectively reported 590 types of library use during their capstone projects, including e-journals, digitized primary sources, books, archives, research consultations, and study space. Electronic resource use dominated, but traditional resources, services, and facilities made a strong showing.

Students then identified the most important e-resource, traditional resource, service, and library facility for their projects and when each was found most useful. At one study site over 60% of students said library-provided e-resources were important when developing a thesis statement. And 90% of students said both library-provided e-resources and traditional resources were important when gathering evidence to support their thesis. Over 80% of this cohort reported library services were important during the gathering stage. These services included asking reference questions, library instruction, research consultations, and interlibrary loan. These data help link library use to learning outcomes associated with capstone assignments and to the VALUE and Tuning frameworks.
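For readers who want to see how percentages like these can be derived from survey responses, here is a minimal sketch of the tabulation. The response format, category names, and stage labels are hypothetical and the four sample responses are made up; this is not the ULI survey instrument or its data.

```python
from collections import defaultdict

# Hypothetical responses: for each student, the project stages at which
# each category of library use was reported as important.
responses = [
    {"e-resources": ["thesis", "gathering"], "traditional": ["gathering"], "services": ["gathering"]},
    {"e-resources": ["gathering"], "traditional": ["gathering"], "services": []},
    {"e-resources": ["thesis", "gathering"], "traditional": [], "services": ["gathering"]},
    {"e-resources": ["thesis"], "traditional": ["gathering"], "services": ["gathering"]},
]


def share_by_stage(responses):
    """Share of respondents marking each (category, stage) pair as important."""
    counts = defaultdict(int)
    for student in responses:
        for category, stages in student.items():
            # set() guards against a stage being listed twice by one student.
            for stage in set(stages):
                counts[(category, stage)] += 1
    total = len(responses)
    return {key: count / total for key, count in counts.items()}


shares = share_by_stage(responses)
for (category, stage), share in sorted(shares.items()):
    print(f"{category:12s} {stage:10s} {share:.0%}")
```

Each stage label could then be mapped to a course learning objective or a rubric criterion, which is one way to read the link between library use and learning outcomes described here.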

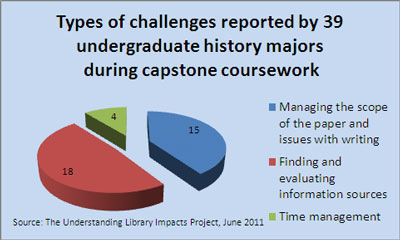

Students reported next on helpful or problematic aspects of library use. For instance, students at both study sites extolled the convenience of electronic resources and the virtues of interlibrary loan, while several complained of inadequate quiet study space and library hours. Information overload and ‘feeling overwhelmed’ were also frequent problems. Time savings and ‘learning about sources for my project’ were mentioned often in regard to library services.

A series of open-ended questions asks about a challenge the students faced during the project. Almost fifty percent of the student-reported challenges were related to finding and evaluating sources and almost as many were related to managing the scope of the paper and issues with writing. Faculty and librarians can ‘drill down’ into these rich comments to understand challenges students face and shape faculty-librarian collaboration to meet the needs of future student cohorts.

Open-ended questions also elicit powerful stories of impact. When asked what she would have done without JSTOR, one student replied:

“I honestly have no idea. I may have been able to get by with just the books I checked out and Google searching, but those databases, JSTOR specifically, really helped me.”

I hope these glimpses of recent pilot study results demonstrate the value of focusing our attention on important and memorable academic activities in students’ lives. Using both quantitative and qualitative methods helps us understand how and why libraries support students when the stakes are highest. Authentic user stories coupled with links between library use and student learning outcomes serve as rich evidence of library impact to support both advocacy efforts and internal improvements.

Future uses

The Understanding Library Impacts protocol is not designed to assess student learning; teaching faculty and assessment professionals fulfill this role. The protocol is intended to link library use with existing assessment frameworks. ULI results can then be used in concert with other assessment data enabling new partnerships with teaching faculty and assessment professionals. For example:

- The AAC&U Essential Learning Outcomes map well to general education outcomes at many colleges and universities. The protocol’s use of the VALUE rubrics creates a natural vehicle for articulating library contributions to these outcomes.

- Understanding Library Impacts results may also integrate with third-party assessment management systems (AMS). As Megan Oakleaf noted in the Value of Academic Libraries Report, integrating library assessment data with AMSs allows the library to aggregate data from multiple assessments gathered across the library and generate reports linking library use to a variety of outcomes important to the parent institution.[39]

It is critical to find ways to connect library use in all its forms with learning outcomes important to faculty, students, and stakeholders. Doing so will bring the library into campus-wide conversations about support for student learning.

Thanks to Ellie Collier, Hilary Davis, and Diane Harvey for their comments and suggestions that helped shape and improve this post. Thanks also to Hilary and Brett Bonfield for their help preparing the post for publication. I also want to thank the librarians, faculty members, and students at the study sites for their support and participation in this pilot study.

1 The costs of attending college continue to outpace standard cost of living indices. From 2000 to 2009, published tuition and fees at public 4-year colleges and universities increased at an annual average rate of 4.9% according to the College Board, exceeding 2.8% annual average increases in the Consumer Price Index over the same period. College Board. Trends in college pricing (2009), http://www.trends-collegeboard.com

2 See for instance, Arum, Richard, and Josipa Roksa. Academically Adrift: Limited Learning on College Campuses. Chicago: University of Chicago Press, 2011.

3 See for instance, Carnevale, Anthony P., Jeff Strohl, and Michelle Melton. What’s it Worth? The Economic Value of College Majors. Georgetown University. Center on Education and the Workforce, 2011, “The New Math: College Return on Investment.” Bloomberg Businessweek, April 7, 2011, http://www.businessweek.com/bschools/special_reports/20110407college_return_on_investment.htm, and “Is College Worth it? College Presidents, Public Assess, Value, Quality and Mission of Higher Education” Pew Research Center, May 16, 2011, http://pewsocialtrends.org/files/2011/05/higher-ed-report.pdf.

4 U.S. Department of Education. A Test of Leadership: Charting the Future of U.S. Higher Education. Washington, D.C., 2006, 24. http://www2.ed.gov/about/bdscomm/list/hiedfuture/reports/final-report.pdf.

5 The Voluntary System of Accountability (VSA) was developed by the National Association of State Universities and Land-Grant Colleges (NASULGC) and the American Association of State Colleges and Universities. Created to respond to demands for transparency about student learning outcomes from the Spellings Commission, participating VSA institutions agree to use standard assessments and produce a publicly available College Portrait which provides data in three areas: 1) consumer information, 2) student perceptions, and 3) value-added gains in student learning. See Association of Public and Land-Grant Universities. Voluntary System of Accountability, 2011, http://www.voluntarysystem.org/ and Margaret A. Miller, The Voluntary System of Accountability: Origins and purposes, An interview with George Mehaffy and David Schulenberger. Change July/August (2008): 8-13.

6 American Library Association. Association of College and Research Libraries. Draft Standards for libraries in higher education, 2011. http://www.ala.org/ala/mgrps/divs/acrl/standards/standards_libraries_.pdf

9 See for instance Powell, R.R. “Impact assessment of university libraries: A consideration of issues and research methodologies.” Library and Information Science Research, 14 no. 3 (1992): 245-257 and Joseph R. Matthews, Library Assessment in Higher Education. Westport, Conn.: Libraries Unlimited, 2007.

10 Kramer, Lloyd A. and Martha B. Kramer. “The college library and the drop-out.” College and Research Libraries 29, no. 4 (1968): 310-312.

11 Mezick, Elizabeth M. “Return on Investment: Libraries and Student Retention.” Journal of Academic Librarianship 33, no. 5 (2007): 561-566.

12 Rushing, Darla & Deborah Poole. ‘‘The Role of the Library in Student Retention,’’ in Making the Grade: Academic Libraries and Student Success, edited by Maurie Caitlin Kelly and Andrea Kross (Chicago: Association of College and Research Libraries, 2002), 91–101; Stanley Wilder, ‘‘Library Jobs and Student Retention,’’ College & Research Libraries News 51 no. 11 (1990): 1035–1038.

13 Aper, J.P. “An investigation of the relationship between student work experience and student outcomes.” Paper presented at the Annual Meeting of the American Educational Research Association (New Orleans, LA, April 1994). ERIC document number, ED375750.

14 Harrell, Charles B. The use of an academic library by university students. Ph.D. dissertation. University of North Texas, 1989.

15 Hiscock, Jane E. “Does library usage affect academic performance? A study of the relationship between academic performance and usage of libraries at the Underdale site of the South Australian College of Advanced Education”. Australian Academic and Research Libraries, 17(4), 207-214, 1986; Self, James. “Reserve readings and student grades: analysis of a case study.” Library and Information Science Research. v. 9 (1), 29-40, 1987; de Jager, Karin. “Impacts & outcomes: searching for the most elusive indicators of academic library performance.” Proceedings of the Northumbria International Conference on Performance Measurement in Libraries and Information Services: “Meaningful Measures for Emerging Realities” (Pittsburgh, Pennsylvania, August 12-16, 2001).

16 Wong, Shun Han Rebekah and T.D. Webb. “Uncovering Meaningful Correlation between Student Academic Performance and Library Material Usage.” College and Research Libraries (in press).

17 Head, Allison. J. & Michael B. Eisenberg. “Finding Context: What Today’s College Students Say about Conducting Research in the Digital Age,” Project Information Literacy Progress Report, The Information School, University of Washington, 2009. http://projectinfolit.org/pdfs/PIL_ProgressReport_2_2009.pdf

18 American Library Association. Association of College and Research Libraries. “Information Literacy Competency Standards for Higher Education,” 2000. http://www.ala.org/ala/mgrps/divs/acrl/standards/informationliteracycompetency.cfm

19 Oakleaf, Megan. “Dangers and Opportunities: A Conceptual Map of Information Literacy Assessment Tools.” portal: Libraries and the Academy, 8 no. 3 (2008): 233-253.

20 Saunders, Laura. “Regional accreditation organizations’ treatment of information literacy: Definitions, outcomes and assessment.” Journal of Academic Librarianship, 33 no. 3 (2007): 317-326, 324.

21 See for instance Ernest T. Pascarella and Patrick T. Terenzini, How College Affects Students: A Third Decade of Research. San Francisco: Jossey-Bass, 2005.

22 See for instance, Chatman, Steve. “Institutional versus academic discipline measures of student experience: A matter of relative validity.” Research & Occasional Paper Series: CSHE.8.07. Berkeley, CA: Center for Studies in Higher Education (2007).

23 Kuh, George D. High-Impact Educational Practices: what are they, who has access to them, and why they matter. Washington, DC: Association of American Colleges and Universities, 2008, p. 11.

24 Pace, C. Robert. The undergraduates: A report of their activities and progress in college in the 1980’s Los Angeles, CA: Center for the Study of Evaluation, University of California, Los Angeles, 1990; Pascarella and Terenzini, 2005.

26 See, for instance, Astin, Alexander W. What matters in college? Four critical years revisited. San Francisco: Jossey-Bass, 1993; Pascarella & Terenzini, 2005.

27 Association of American Colleges and Universities, College Learning for the New Century A report from the National Leadership Council for Liberal Education and America’s Promise, Washington, DC: AAC&U, (2007) http://www.aacu.org/leap/index.cfm; Association of American Colleges and Universities. The VALUE rubrics, 2010. http://www.aacu.org/value/rubrics; Rhodes, Terrel, ed. 2010. Assessing Outcomes and Improving Achievement: Tips and Tools for Using Rubrics. Washington, DC: Association of American Colleges and Universities.

28 Rhodes, Terrel L. “VALUE: Valid Assessment of Learning in Undergraduate Education.” New Directions for Institutional Research. Assessment supplement 2007, (2008): 59-70, p. 67.

29 Gonzalez, Julia and Robert Wagenaar, eds. Tuning Educational Structures in Europe II. Bilbao, ES: University of Deusto, 2005

30 See Adelman, Clif. The Bologna Process for U.S. eyes: Re-learning Higher Education in the Age of Convergence. Washington, DC: Institute for Higher Education Policy, 2009 for an overview. The Bologna Process refers to an ongoing educational reform initiative in European Higher Education begun in 1999 as a commitment to align higher education on many levels. Clif Adelman writes that the purpose of this initiative is to “bring down educational borders” and to create a “’zone of mutual trust’ that permits recognition of credentials across borders and significant international mobility for their students” (p. viii). A current, yet incomplete, Bologna initiative is the creation of three levels of qualification frameworks for the purpose of assuring students’ college credentials from one country are understandable in another. The Tuning process is the narrowest of the three frameworks focused on specific disciplines. A similar process is underway in Latin America.

32 See for instance, Indiana Commission for Higher Education. Tuning USA Final Report: The 2009 Indiana Pilot, 2010. http://www.in.gov/che/files/Updated_Final_report_for_June_submission.pdf

34 Collaborative for Authentic Assessment and Learning. Association of American Colleges and Universities, http://www.aacu.org/caal/spring2011CAALpilot.cfm and VALUE Rubric Reliability Project. Association of American Colleges and Universities, http://www.aacu.org/value/reliability.cfm

36 Western Association of Schools and Colleges. “WASC Receives $1.5 Million grant from Lumina Foundation”, May 18, 2011, http://www.wascsenior.org/announce/lumina

37 Oakleaf, Megan. The Value of Academic Libraries: A Comprehensive Research Review and Report. Chicago: Association of College and Research Libraries, 2010. http://www.acrl.ala.org/value/

38 Rodriguez, Derek A. “How Digital Library Services Contribute to Undergraduate Learning: An Evaluation of the ‘Understanding Library Impacts’ Protocol”. In Strauch, Katina, Steinle, Kim, Bernhardt, Beth R. and Daniels, Tim, Eds. Proceedings 26th Annual Charleston Conference, Charleston (US). http://eprints.rclis.org/archive/00008576/ (2006); Rodriguez, Derek A. Investigating academic library contributions to undergraduate learning: A field trial of the ‘Understanding Library Impacts’ protocol. (2007). http://www.unc.edu/~darodrig/uli/Rodriguez-ULI-Field-Trial-2007-brief.pdf

Nice post, Derek!

Thanks Megan,

Derek

I work in a small private career college and this article just hits the nail on the head. These challenges are going to continue to come into the library and unless we are able to effectively communicate with stakeholders we will become what some fear, obsolete.

Great article – thank you for the insight.

Thank you Jennifer,

These are challenging times and there is a lot of interesting work to be done.

Derek

Great post! I’m actually just settling into my Ph.D. program in educational leadership at Oklahoma State University (this summer is my third semester), and contemplating a somewhat similar dissertation. I’m planning to email you later today to share notes and ask some other questions, but I did want to raise a question here.

You mention that the capstone and upper-division classes are the best place to assess student learning, citing Astin (who I need to read), and Pascarella & Terenzini (who I have read). My Day Job is Access Services & Distance learning librarian at a small regional public university that serves a largely working class and ‘nontraditional’ population. I would argue that these are the types of students who could be helped the most by increased student learning, and yet, less than half of them graduate or transfer out within 6 years. How does the library best intervene in student learning before they give up, and how do we assess that intervention?

Hi Sarah,

Thank you for your note and great question.

I would approach this first by getting involved with local initiatives. The library should be engaging with faculty, the student life office, academic advising, and coordinators of general ed programs to become an active part of the campus’s intervention strategy. As you note, students can’t benefit from college if they do not return.

Measuring the impact is a different challenge. When approaching a problem like this I recommend that the library adopt metrics already in place within the institution. If the institution has a major initiative underway to increase retention, then the library should seek connections with that measure.

If the institution has general education goals and assessment regimes in place, then I would try to link the library assessment program into those initiatives. In this case, the library needs to be ready to answer the question:

“How did our involvement in the xyz intervention program help achieve the goals of our parent institution?”

This is hard work but staff in institutional research or assessment offices may already be trying to answer these questions. So again, partnering with other units on campus can help bring expertise to the library assessment program. This effort will simultaneously bring the library into conversations about an issue of critical importance to the campus.

I can also recommend a paper presented by Craig Gibson and Christopher Dixon regarding measuring academic library engagement that you may find helpful http://bit.ly/kCwQFC.

Lastly, I am happy to hear about your interest in working on challenges faced by non-traditional students. In one of my pilot studies I found that non-traditional students face unique challenges and value library support tremendously. I’ve implemented a non-traditional student scale in my protocol so I can explore in my dataset the challenges non-traditional students face, differences in their preferences, and ways the library helps them.

I think we need more research on what really matters to this growing population to help us shape library services to meet their needs. This is one of our big opportunities, in my opinion.

Good luck in your work.

Derek

This is great, Derek–I like focusing on the way the library works with capstone students, although Sarah raises a good point about first generation college students and non-trad college students. Very interesting!

Thanks for your note Libby,

I’ve been thinking about Sarah’s question over the weekend as well.

So why the capstone experience? The current study deployed the ULI protocol to detect links between library use and student learning outcomes that are highly valued by stakeholders in our 4-year institutions: the broad abilities and discipline-specific competencies expected of college graduates.

As our library assessment resources are finite, the ‘capstone form’ of the ULI protocol focuses on specific ‘high-impact’ experiences (in this case, upper-level and capstone coursework) where stakeholder expectations, student effort, and faculty assessment of student work converge. For these reasons, I believe a focus on these experiences provides an excellent opportunity to detect credible and authentic evidence of library contributions to these learning outcomes.

However, the capstone experience is only one of several ‘high-impact’ practices linked to higher levels of retention and engagement, as reported by George Kuh (cited in footnote 23 above) and listed at http://www.aacu.org/leap/hip.cfm. Other high-impact practices include first-year experiences, writing intensive courses, undergraduate research, and service learning, to name a few.

Many of our campuses engage in ‘high-impact practices’ and run other programs which are intended to support a variety of student outcomes and learning outcomes such as those mentioned by Sarah. It seems to me that focusing on student experiences in these programs should be fruitful for detecting library impact on associated outcomes.

I think the ULI protocol and other tools could be appropriate methods depending on the context and the outcomes in question.

Derek

Dear Derek,

Thanks for a thought-provoking post about library instruction and impact.

Question: Are you doing more studies as a follow up to your initial study?

Are you willing to share your new suite of instruments with other institutions?

Hi Susan,

You are welcome. I appreciate your interest and comment.

I am glad you asked about next steps for the protocol. I do intend for the instruments to be of practical use to libraries. I am still working on the details of how that will be done.

I am finishing data collection for the 2011 projects this week and planning new projects for the coming year. Those projects are just taking shape but will likely involve repeat studies in history, extending the protocol to other disciplines, and/or experimenting with ways to link ULI data with assessment results.

Again, thank you for your post.

Derek