#DitchTheSurvey: Expanding Methodological Diversity in LIS Research

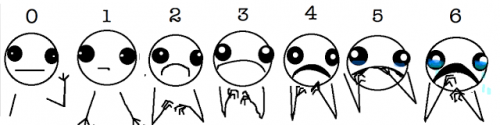

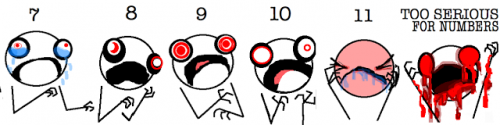

Survey rating scale:

Allie Brosh, Hyperbole and a Half, CC-BY-NC-ND 3.0 United States License. Retrieved from http://hyperboleandahalf.blogspot.com/2010/02/boyfriend-doesnt-have-ebola-probably.html

In Brief: Recent content analyses of LIS literature show that, by far, the most popular data collection method employed by librarians and library researchers is the survey. The authors of this article, all participants in the 2014 Institute for Research Design in Librarianship, recognize that there are sound reasons for using a survey. However, like any one method, its very nature limits the types of questions we can ask. Our profession’s excessive reliance on the survey likewise imposes excessive limitations on what we can know about our field and our users. This article summarizes recent studies of the methods most common to LIS studies, explores more deeply the benefits of using non-survey methods, and offers recommendations for future researchers. In short, this article is a call to arms: it is time to ditch the survey as our primary research method and think outside the checkbox. It is time to fully embrace evidence-based library and information practice and promote training in diverse research techniques.

By Rebecca Halpern, Christopher Eaker, John Jackson, and Daina Bouquin

Introduction

How do we know what we know about libraries and their users? How do we best advocate for our library users and represent their needs in our services? What new theories are waiting to be developed to explain our reality? In order to comprehensively answer these questions, the LIS profession must actively support skills development in research data collection, analysis, and distribution to allow librarians to investigate and address their most pressing issues and advance our field of knowledge. This article highlights the array of research methods available to us, and encourages LIS researchers to expand the methodological diversity in our field.

In particular, over-reliance on the survey method is limiting the types of questions we are asking, and thus, the answers we can obtain. This article is a call to arms: it is time to ditch the survey as our primary research method and think outside the checkbox. It is time to fully embrace evidence-based library and information practice and promote training in diverse research techniques.

Evidence-based library and information practice (EBLIP) is a relatively new concept. If we date its founding to the seminal works of Jon Eldredge (1997, 2000a, 2000b) and Andrew Booth (2000) on evidence-based librarianship in the medical profession, the concept is not quite 20 years old. It was not until 2006 that EBLIP had gained enough professional traction to support a journal dedicated to this type of research, Evidence Based Library and Information Practice. According to Eldredge, evidence-based practice “employs the best available evidence based upon library science research to arrive at sound decisions about solving practical problems in librarianship” and strives to put “the ‘science’ back in ‘library science’” (2000, p. 290). By using evidence-based practice in our decision-making, librarians can offer demonstrably better collections, services, and spaces.

Evidence in librarianship comprises three spheres of knowledge: professional expertise, local or contextual facts, and professional research (Koufogiannakis, 2011). LIS professionals use this collected evidence to, among many other things, make decisions about accreditation and funding requirements, plan for the future, make decisions about new services or changes to existing services, and decide how to focus outreach efforts. Additionally, accreditation boards, senior university administrators, and boards of directors often require librarians to present assessment data or library-specific metrics. However, librarians are frequently not comfortable designing and administering research studies to obtain the types of data required of them (Kennedy & Brancolini, 2012).

While EBLIP does not privilege the use of any one sphere of knowledge over another, the library and information science profession’s production of evidence is skewed toward expertise and non-research-based narratives, which do not implement rigorous or systematic scientific processes. One study analyzed the contents of 1,880 articles in library and information science journals and found that only 16% “qualified as research” (Turcios, Agarwal & Watkins, 2014). The authors of that study used Peritz’s (1980) definition of research, namely “an inquiry which is carried out, at least to some degree, by a systematic method with the purpose of eliciting some new facts, concepts, or ideas.” Another study found that nearly one-third of all LIS journal literature published in 2005 utilized a survey-based research strategy (Hider & Pymm, 2008).

While surveys can be excellent tools for many questions, and are attractive to LIS professionals because they can reach many people economically, effective surveys require knowledge of survey design and validation, sampling methods, quantitative (and often qualitative) data analysis, and other skills that require formal training many LIS professionals do not possess (Fink, 2013). Without rigorous survey design and validation, surveys can produce results that are invalid, misleading, or simply not meaningful for the question at hand. Additionally, surveys are not appropriate instruments for all research questions: researchers may attempt to use them in ways that are overly ambitious or that yield results that cannot be satisfactorily generalized. Likewise, some surveys may be administered in inappropriate formats or may inadvertently contain “forced-choice” questions (Fink, 2013). Whether the lack of methodological diversity in the professional library and information science literature is due to insufficient training in research design, to the environment in which we conduct research, or simply to “imposter syndrome” (Clance & Imes, 1978), it is clear that librarians need to be better supported in developing research questions, designing methodologically sound studies, and analyzing and publishing our findings.

Literature Review

Booth published the first review of evidence-based librarianship (EBL) in the general library literature in 2003. In it, he examined the early development of EBL and its migration from the health sciences to library and information science. By 2003, the subfield of EBL was gaining momentum, having already been the subject of two international conferences, but it lacked a commonly agreed-upon definition, scope, and terminology (Booth, 2003). To address this situation, Booth outlined the process of evidence-based practice, which comprises:

- focusing/formulating a question

- finding evidence

- filtering results

- appraising the literature

- applying the results in practice, and finally

- evaluating one’s performance.

Most notably, Booth provides a “hierarchy of evidence,” originally created by Jon Eldredge (2000a; 2000b), that illustrates the most effective types of evidence for decision making, with systematic reviews and well-designed randomized controlled trials at the top and opinions, descriptive studies, and reports of expert committees at the bottom. As his review of the research shows, “explicit and rigorous” methods continue to be EBL’s most defining characteristic, the sine qua non of a process that requires valid evidence to improve everyday decision making.

So how much of the professional LIS literature constitutes research? According to a review by Koufogiannakis, Slater, and Crumley (2004) of previous studies published between 1960 and 2003, the percentage of research articles reported in various LIS journals ranges from 15% to 57%. Their own evaluation of 2,664 articles published in 2001 found that 30.3% could be classified as research. Unfortunately, it is difficult to compare the findings of these studies because they lack a shared definition of what constitutes a research article and vary widely in their sampling and categorizing methods.

Peritz’s 1980 definition of research (quoted above) is an often-used rubric for determining which articles in content analysis studies should be classified as research. Yet despite the common use of this definition, studies use different parameters for determining which journals to include and, as a result, report sharply divergent answers to the question of how much of the professional LIS literature constitutes research. For example, consider three studies published in 2014: Turcios, Agarwal, and Watkins (2014) examined articles published in 2012–2013 and found that 16% qualified as research; Walia and Kuar (2014) examined articles in six top-tier journals published in 2008 and found that 56% qualified as research; and Tuomaala, Jarvelin, and Vakkari (2014) concluded that as much as 70% of 2005 articles qualified as research. Each of these studies cited the same definition of research, but in addition to looking at different publication years, the authors used dramatically different criteria for selecting journals: respectively, LIS journals available at the authors’ institution, a purposive selection of journals published in the United Kingdom and United States, and a purposive selection of periodicals that previous studies had characterized as “core journals.”

While the answer to how much of the LIS literature can be classified as research remains uncertain, one finding remains the same across most studies: librarians rely on surveys. A 2008 study by González‐Alcaide, Castelló‐Cogollos, Navarro‐Molina, Aleixandre‐Benavent, and Valderrama‐Zurián that examined the frequency of keywords assigned to articles in the LISA database published between 2004 and 2005 found that “survey” was the seventh most common descriptor (out of almost 7,000 total descriptors) and was the most common co-occurring descriptor for articles dealing with academic or public libraries. Koufogiannakis, Slater, and Crumley’s (2004) analysis classified approximately 40% of the research articles they examined as descriptive studies, most of which used surveys. After these, the most popular study types were comparative studies, bibliometric studies, content analysis, and program evaluation (only 12 of the 807 research articles used more rigorous methods such as meta-analysis or controlled trials). Hildreth and Aytac (2007) found that LIS researchers used surveys in one-quarter of studies published between 2003 and 2005. These findings confirm Hildreth and Aytac’s earlier study of articles published between 1998 and 2002, which found that the survey is the most commonly used method in LIS research. Hider and Pymm (2008), Davies (2012), Turcios, Agarwal, and Watkins (2014), Tuomaala, Jarvelin, and Vakkari (2014), and Luo and McKinney (2015) all found similar results: surveys were the most commonly used data collection method (respectively, 30%, 22%–39%, 21%, 27%, and 47.6%). Of particular note, when dividing research articles between those that used a single method and those that used mixed methods, Turcios, Agarwal, and Watkins (2014) found that 49% of the single-method studies used surveys.

The one longitudinal study of LIS literature mentioned above, Tuomaala, Jarvelin, and Vakkari (2014), does conclude that the proportion of research articles increased significantly from 1965 to 2005 and that, of those, the proportion of empirical research also increased. However, the survey method continues to account for about one-quarter of all research strategies employed in the articles examined in Tuomaala, Jarvelin, and Vakkari’s study. These findings prompt the following questions: Why the reliance on this one particular method? Is it due to its ease of implementation, or is there something inherent about LIS research that makes the survey an ideal strategy? Are we, as researcher-practitioners, missing out on key insights into our discipline? And, most importantly, what other methods are at our disposal?

Choosing the Best Methods

Answering the above questions will require more systematic analysis of LIS literature and professional practices. Until we, as a profession, are ready to thoroughly investigate our modus operandi, we offer this series of recommendations for choosing the best methodology and suggest a few alternatives to the survey.

Once you determine the question you seek to answer, ask yourself, “Which method will provide the best answer?” The answer lies partially in the way you ask your research question and, to a lesser extent, in the external constraints on your research, but ultimately it should depend on which method will provide “sound data.” As Booth (2003) notes, evidence-based librarianship depends upon the use of “explicit and rigorous” methods. The method you choose must match the research question in both form and function, and it must meet professional standards for rigorous research. The following sections briefly explain how to choose the right research method for your research question and detail some of the research methods at your disposal.

Research methods are generally divided into two types, named primarily for the type of data they obtain: qualitative and quantitative. Qualitative data are generally text, sounds, and images, and typically answer questions such as, “What is the meaning of…?” or “What is the experience of…?” If the main objective of your research is to explore or investigate, choose a qualitative method. Qualitative research is defined by its contrast with quantitative research as “research that uses data that do not indicate ordinal values” [emphasis added] (Nkwi, Nyamongo, & Ryan, 2001). Quantitative research, in contrast, uses data that do indicate ordinal values: data that can be ordered and ranked, and that can answer questions such as “How many?”, “How much?”, or “How often?” Examples of quantitative data are numbers, ranks of preferences, or scales, such as degree of agreement or disagreement with a statement. If the main purpose of your research is to compare or to measure, choose a quantitative method.
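To make the idea of ordinal data concrete, here is a minimal Python sketch that assumes a hypothetical five-point agreement item and invented responses (neither comes from any study cited here). Mapping each label to its rank is what makes the responses orderable; the sketch reports the median rank rather than the mean, since the distances between scale points are not known to be equal.

```python
from collections import Counter
from statistics import median_low

# Hypothetical five-point agreement scale, ordered from lowest to highest.
SCALE = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]
RANK = {label: position for position, label in enumerate(SCALE, start=1)}

# Invented responses to a single statement, for illustration only.
responses = ["Agree", "Neutral", "Strongly agree", "Agree", "Disagree", "Agree"]

# Converting labels to ranks lets the responses be ordered and compared.
ranks = sorted(RANK[answer] for answer in responses)

# median_low always returns an actual scale point, never an average of two.
typical_response = SCALE[median_low(ranks) - 1]

# "How many?" style summaries: counts per option and share at or above "Agree".
counts = Counter(responses)
share_agreeing = sum(r >= RANK["Agree"] for r in ranks) / len(ranks)

print("Typical response:", typical_response)
print("Counts:", dict(counts))
print(f"Agree or stronger: {share_agreeing:.0%}")
```

The choice of `median_low` is deliberate: with an even number of responses, averaging two middle ranks could produce a value that falls between two labels, which has no meaning on an ordinal scale.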

To determine whether your research will require qualitative or quantitative data, look at your research question. As we noted above, the answer lies partially in the way you ask your question. For example, if you are seeking to answer the question: “What are the experiences of freshman college students in their first-semester literature course?” you may want to use a method that obtains qualitative data, such as an in-depth interview, participant observation, document study, or a focus group. If, on the other hand, you are asking a question that can be answered using ordinal values, such as, “Is student learning improved by a mid-course intervention using library instruction?” you may want to use a method that obtains quantitative data, such as a poll, questionnaire, or statistical analysis of students’ final grades.

Once you decide which type of data will best answer your research question, you need to determine how you will analyze that data. Examples of ways to analyze qualitative data include grounded theory, content analysis, discourse analysis, and narrative analysis. Quantitative data analysis primarily uses statistical approaches to analyze variation in quantitative data (Schutt, 2012). A more detailed discussion of ways to analyze data is beyond the scope of this article. However, we recommend that readers consult the resource list at the end of this article for additional information.

Below are descriptions of several data collection methods, starting with qualitative methods and followed by quantitative methods, along with their strengths and weaknesses and the types of answers they can provide. Methods and questions shape each other: the method directly influences the types of questions that can be asked, and thus the types of knowledge generated, while the question at hand constrains which methods are suitable.

Qualitative Research Methods

Qualitative research methods are most appropriately employed when the research question is one that attempts to investigate, explore, or describe. These methods might examine a library user’s behaviors, experiences, and motivations. Instead of attempting to prove a hypothesis, qualitative methods typically explore an area of inquiry to discover many possible viewpoints. Below, we discuss four qualitative methods that may be useful to LIS research: in-depth interviews, focus groups, vignettes, and participant observation.

In-depth interviews, also called semi-structured interviews, are an effective way to explore the depths and nuances of a topic. They consist mainly of open-ended questions and, though they often follow a script, feel more like a conversation (Guest, Namey, & Mitchell, 2013). Because the investigator is speaking in real time with the research participant, the investigator can explore topics as they arise using follow-up questions (a technique called probing), such as asking the participant to explain what they meant by something they just said. In-depth interviews can help investigators gain insight into cultural knowledge, personal experiences, and perceptions (Guest et al., 2013).

In-depth interviews need not always follow the same question-response format. In a 2012 study of academic library architecture by Yi-Chu and Ming-Hsin, the investigators asked students to take photographs of library spaces and then used the photos as prompts during in-depth interviews, a method called “photo-elicitation.” Because describing preferences for library space often requires articulating abstract ideas, the photographs helped respondents describe their preferences more clearly, and this unusual data gathering process produced a better answer to the question “How do our students prefer to use library space?” While a survey could have been used to gather responses to this question, the opportunity for participants to photograph library spaces and explain their opinions gave library staff a richer understanding of student preferences.

One of the authors of this paper, Eaker, is currently researching the information management practices of civil engineering professionals. He is using in-depth interviews so that he can learn not only how civil engineers manage all the information they use on a daily basis, but also about their experiences with managing information. He wants to know how they perceive the importance of information management skills to their work and is less interested in how often they do one thing or another. He therefore chose the in-depth interview, which offers depth of understanding across a wide range of experiences, over a quantitative method such as a survey.

Like in-depth interviews, focus groups allow the investigator to explore depth and nuance, but they have the added benefit of capturing interactions between participants, group dynamics, verbal and physical reactions, and problem-solving processes (Guest et al., 2013). Focus groups are carefully planned discussions on a focused topic, typically with 6–8 participants and 1–2 facilitators. Because of group dynamics and social norms, the organic discussions that occur in a focus group can prompt participants to provide data that relate to the research question in unique ways, something which may not occur in a one-on-one interview. However, focus groups are at a distinct disadvantage when researching sensitive or confidential topics, as some participants may be hesitant to discuss such topics in the presence of others. In these cases, in-depth interviews may be a more appropriate data collection method.

In a 2003 article published in College & Research Libraries that details the use and effectiveness of focus groups, investigators at Brown University Library found that “focus group meetings usually cover a wider range of issues and concerns because even though the [discussion leader] has a script to follow, discussion is driven by participants and deviations from the script are allowed and can lead to more input by participants” (Shoaf, 2003). Interestingly, the investigators of this study (which examined user satisfaction with and perceptions of the Brown University Library) also discovered a wide disparity between the findings of the focus groups and those of surveys that sought to answer the same research question. The difference was most likely due to the ability to probe participants about their responses to certain questions. As the author notes, through probing “clear patterns of deep concern began to emerge” that were not apparent in the survey responses and that, indeed, surveys are not capable of obtaining. Shoaf goes on to note that focus groups have concomitant benefits as well, including their use as marketing tools and as a way to validate the data of other studies.

Respondents in qualitative studies do not always need to respond to targeted questions. As noted above, visual objects like photographs can be used to inspire conversation. Similarly, a type of verbal picture, called a vignette, can be used to elicit a unique way of thinking. Vignettes, as defined by Finch (1987), are brief stories, usually only a few sentences in length (though more detailed stories can be presented using “developmental vignettes”), that present an often hypothetical situation to which participants are asked to respond. Much like in-depth interviews, these responses can be delivered orally or in writing, may be either highly structured or semi-structured, and often include probing follow-up questions. Vignettes offer two primary advantages: (1) they provide an opportunity to study normative material (how a respondent believes the average person should respond to a given situation rather than how they or someone else would actually respond), which is often difficult to capture using traditional survey methods, in-depth interviews, or focus groups, and (2) they allow the investigator to explore perceptions, attitudes, and beliefs about material that may be sensitive or difficult to define. Most notably, vignettes allow investigators to gain insight into their participants’ interpretive framework and perceptual processes by placing the participants within a situation that encourages them to explicate their social, cultural, or group norms in order to respond to the characters in the story.

For example, another author of this paper, Jackson, is currently using vignettes to study how undergraduates perceive and value the threshold concepts outlined in the new ACRL Framework for Information Literacy for Higher Education. As some students in his population may have yet to “cross the threshold,” vignettes provide an avenue for discussing an idea that the participants may not be able to articulate. The data collected from vignettes are usually analyzed qualitatively in order to identify prominent themes.

Finally, participant observation is a method of data collection that, as the name suggests, involves the investigator observing participants in particular situations or contexts. This method is particularly useful when participants may not be able to describe their experiences accurately or without the influence of social norms and expectations. In its simplest form, participant observation requires investigators to keep a log of observations and then analyze that log using qualitative techniques (e.g., coding, thematic analysis). Librarians at the Claremont Colleges Library (Fu, Gardinier, & Martin, 2014) used participant observation in a novel way: they asked students to track their daily treks through campus on campus maps and, when those students were in the library, texted questions to the students who had agreed to be observed (e.g., where they were physically located, what they were doing, and who they were with at the moment). In this way, using a relatively low-cost data collection method, librarians were able to discover where students go before and after using the library as well as what they do while they are there. While this is not participant observation in its purest sense, it nonetheless draws upon the same theory: after-the-fact, self-reported data can be misleading, but on-the-spot, direct observation can give a better sense of actual behavior.

Quantitative Research Methods

As discussed above, quantitative research methods should be used when one seeks to answer questions using ordinal values, such as “how often,” “how many,” or “how important.” Additionally, quantitative methods are typically employed to test a hypothesis or when the range of behaviors or outcomes is already known. Below we describe some common and not-so-common quantitative methods that are useful for LIS research: secondary data analyses; randomized controlled trials; social network analyses; and surveys.

Secondary data analysis “involves the analysis of an existing dataset, which had previously been collected by another researcher, usually for a different research question” (Devine, 2003). There are many advantages to using pre-existing data sets to answer a research question. First, because the data have already been collected, a researcher can spend more time analyzing and interpreting results. Second, secondary data analysis gives a researcher access to data they may otherwise be unable to collect, such as longitudinal or multi-national data. In the best cases, those data sets will have already been validated and controlled for reliability by the initial researchers. Since validating one’s own data set is time-consuming and requires specialized knowledge of statistical methods and research design, this can be a boon to novice researchers.

Secondary data analysis has been used in LIS research to analyze the impact of libraries on student success and retention (Crawford, 2015). Crawford used data from the Integrated Postsecondary Education Data System and the Academic Library Survey. She collected salient variables from each data set, such as expenditures per FTE, library instruction, student services, and graduation and retention rates. Using a variety of statistical models, she assessed the significance and influence of those variables on each other. She found that “instruction spending per FTE and student support spending” were both highly correlated with student retention. These findings could be used to make informed decisions about budget allocation and about how best to focus library resources to improve student retention and academic performance.
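The basic move in a study like this, merging variables from two existing datasets and measuring how strongly they move together, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Crawford’s actual analysis: the institution IDs and values are invented, and it computes a single Pearson correlation rather than the variety of statistical models she used.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Two hypothetical "existing datasets", each keyed by an institution ID.
# All values are invented for illustration; they are NOT from Crawford (2015).
instruction_spending_per_fte = {"A": 12.0, "B": 20.5, "C": 8.0, "D": 30.0, "E": 15.5, "F": 9.0}
retention_rate = {"A": 0.71, "B": 0.78, "C": 0.66, "D": 0.85, "E": 0.74}

# Merge step: keep only institutions that appear in both sources ("F" is dropped).
shared_ids = sorted(instruction_spending_per_fte.keys() & retention_rate.keys())
x = [instruction_spending_per_fte[i] for i in shared_ids]
y = [retention_rate[i] for i in shared_ids]

r = pearson_r(x, y)
# A high r shows association only; it does not show that spending causes retention.
print(f"n = {len(shared_ids)}, r = {r:.2f}")
```

The merge step matters as much as the statistic: records that exist in only one source silently drop out, so a real secondary analysis should report how many cases survived the join.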

Another type of quantitative research method is the randomized controlled trial. Randomized controlled trials are useful when the investigator wants to determine whether or not, and to what extent, an intervention affects an outcome (Oakley, 2004). Randomized controlled trials consist of a minimum of two groups, a control group and an intervention group, to which participants are assigned randomly. For example, to research the effectiveness of a mid-course intervention in a first-year literature class, the investigator could use a randomized controlled trial to divide the class into two sections: one would receive an intervention (e.g. a special tutoring session outside of the regular class) and the other, nothing. The investigator would then compare the two groups’ final grades to determine whether and how much the intervention helped or hindered the students who received it. Shaffer (2011) did something similar using randomized controlled trials to examine learning and confidence levels among students receiving library instruction online versus those receiving in-person library instruction. The findings, which showed that face-to-face students were more satisfied, were statistically significant and thus could more effectively be used to make strategic funding and staffing decisions.
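The two defining steps of the hypothetical mid-course intervention trial described above, random assignment and a comparison of outcomes, can be sketched in Python. Everything here is an assumption for illustration: the student IDs are placeholders, the final grades are simulated with a built-in five-point effect, and the permutation test is one simple way (among several, such as a t-test) to ask whether the observed difference could plausibly have arisen by chance.

```python
import random
from statistics import fmean

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into control and intervention groups."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]  # (control, intervention)

def permutation_p_value(control, treated, n_iter=10_000, seed=None):
    """Two-sided permutation test for a difference in group means.

    Reshuffles the pooled outcomes into groups of the original sizes and counts
    how often the shuffled difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(fmean(treated) - fmean(control))
    pooled = list(control) + list(treated)
    k = len(treated)
    extreme = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        if abs(fmean(pooled[:k]) - fmean(pooled[k:])) >= observed:
            extreme += 1
    return (extreme + 1) / (n_iter + 1)

# Hypothetical class of 20 students, identified only by placeholder IDs.
students = [f"S{i:02d}" for i in range(1, 21)]
control_ids, intervention_ids = randomly_assign(students, seed=2014)

# SIMULATED final grades: a baseline near 75 plus an ASSUMED 5-point effect
# for students who received the intervention. Real data would replace this.
grade_rng = random.Random(1)
treated_set = set(intervention_ids)
final_grades = {
    s: grade_rng.gauss(75, 8) + (5 if s in treated_set else 0) for s in students
}

control_grades = [final_grades[s] for s in control_ids]
treated_grades = [final_grades[s] for s in intervention_ids]
difference = fmean(treated_grades) - fmean(control_grades)
p = permutation_p_value(control_grades, treated_grades, seed=7)
print(f"Mean difference: {difference:.1f} points, permutation p = {p:.3f}")
```

Random assignment is the key design choice: because chance alone decides who receives the intervention, differences such as prior ability tend to balance out across groups, which is what lets a trial attribute a difference in outcomes to the intervention itself.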

Social network analyses examine networks of human interactions and resource exchange (Haythornthwaite, 1996). In LIS research, social network analyses can be used to understand how students informally share information. By graphing and analyzing participants’ social, professional, and scholastic networks, researchers can see where information is exchanged freely, where information exchange may be disrupted, and who serves as a “hub” of information exchange (Haythornthwaite, 1996). This type of analysis could, among other possibilities, help librarians identify effective modes of outreach or understand peer networks among faculty and students. Currently, Kennedy, Kennedy, and Brancolini (2015) are using social network analysis to study the sharing of research information among the initial cohort of scholars at the Institute for Research Design in Librarianship. By administering a social network survey to the same group of participants multiple times (the first phase of a longitudinal, multi-cohort exploratory study), they will be able to determine whether the participants’ research networks increase in complexity over time.
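To show what identifying “hubs” and breaks in information exchange can look like computationally, here is a minimal pure-Python sketch built on invented ties among hypothetical participants. It uses degree centrality (the share of other participants each person is directly tied to) to find hubs, and connected components to find groups between which no information flows. Dedicated libraries such as NetworkX provide equivalent functions (`degree_centrality`, `connected_components`) for real analyses.

```python
from collections import defaultdict

# Hypothetical, undirected "shares research information with" ties.
ties = [
    ("Ana", "Ben"), ("Ana", "Cy"), ("Ana", "Dee"), ("Ana", "Eli"),
    ("Ben", "Cy"), ("Fay", "Gus"),
]

def build_graph(edges):
    """Adjacency sets; people with no ties at all would not appear here."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph

def degree_centrality(graph):
    """Fraction of the other participants each person is directly tied to."""
    n = len(graph)
    return {node: len(neighbors) / (n - 1) for node, neighbors in graph.items()}

def components(graph):
    """Groups of people connected by some chain of ties (depth-first search)."""
    seen, groups = set(), []
    for start in graph:
        if start in seen:
            continue
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            group.add(node)
            stack.extend(graph[node] - seen)
        groups.append(group)
    return groups

graph = build_graph(ties)
centrality = degree_centrality(graph)
hub = max(centrality, key=centrality.get)
print("Most connected:", hub, round(centrality[hub], 2))
print("Separate clusters:", components(graph))
```

In this invented network, one person links most of the first cluster, and a second pair exchanges information only with each other: the kind of pattern that might suggest where targeted outreach could bridge a gap.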

Finally, we come to the best-known quantitative research method: the survey. As described earlier, surveys are the most frequently used research method in LIS research. Surveys are used when investigators want to quantify something, that is, when the research question asks how many, how much, or how often something occurs, which are often the kinds of questions we ask. Surveys can measure the impact of services or collections, inform strategies for outreach, or evaluate instructional tools or methods, and are often the best tool to use when a large number of data points are necessary. They are highly structured: the questions and answer options are set in advance, and, unlike in in-depth interviews, no probing can occur. Surveys are relatively easy to administer because they are often inexpensive to deploy and less time-consuming to analyze. Mastering survey design, however, is difficult and requires pre-testing for validity, which is why LIS professionals ought to consider using scales, measurements, and surveys that have already undergone rigorous testing.

For example, one of the authors of this article, Halpern, is conducting a study on the efficacy of activity-based instruction in online classrooms in alleviating information anxiety. Halpern is administering Van Kampen’s Multidimensional Library Anxiety Scale (2004) to measure anxiety levels in participants. Instead of drafting her own scale and undergoing the rigorous and time-consuming validation process, she opted to use an existing, already validated scale. Without validation and skilled design, surveys can elicit misleading responses by, for example, “prompting” subjects to answer in a specific way or confounding subjects by including double-negatives in the question wording. They can also lead to results that are not meaningful to the research question, as when subjects misunderstand the question or provide answers that do not address the research question.

We recognize that there are methodologically sound reasons for using a survey, but, like any one method, surveys limit the types of questions that one can ask. Our profession’s excessive reliance on the survey likewise imposes excessive limitations on what we can know about our field and our users. Surveys can provide important insights into questions involving, for example, library patrons’ usage patterns of materials in an institutional repository or how patrons become aware of the repository and the materials in it, but they cannot answer the question of why people access those materials.

For example, if a team of researchers were interested in measuring the use of an institutional repository for the purpose of improving traffic to the site, they could use a survey to identify how many users were aware of the repository, how many users have accessed the repository in the past year, and the purpose the users provided for that access (chosen from a predetermined list of possible answers). The survey method, however, makes it difficult for the researchers to determine why a user would choose not to access the repository, as the possible explanations are infinitely diverse. While a list of predetermined responses can answer “What are the most common barriers to access?”, even well-researched and pre-tested responses do not allow users to describe these barriers in their own words. By relying on a survey format, the researchers potentially miss important issues like unique technological needs, diversity of content, and relevance to user needs.

We believe that our reluctance to answer the question “why” and our over-reliance on answering the questions “how many” and “how often” are crippling our ability to deepen our understanding of the profession and communicate our value. While increases in user satisfaction levels, higher reported grade point averages, and improved reference statistics are certainly important metrics for measuring the effect of library services, by also answering why and how the library affects these areas, we can dig more deeply into these determinants of student success and satisfaction and discover more than mere correlation. True causation may be a grail forever just out of reach, given the nature of library services and education in general, but through more diverse qualitative methods, we can begin to see our true value in a clearer light.

Conclusion

As evidence-based practice becomes increasingly critical to the continuation and evolution of our profession, we need to reflect on how we produce knowledge. Our field is ripe for rigorous research, but our over-reliance on the survey is limiting the depth of that knowledge. With the survey method dominating most LIS studies, we strongly recommend that librarians increase the diversity of their methodological toolbox. Determine the methods that will most appropriately answer your research question, and even go so far as to seek out questions that are best answered by less frequently employed methods.

As educators, information experts, and inquisitive professionals, librarians need to be willing to ask tougher questions, ones that can only be answered using less common and often more rigorous methods. The authors of this article assert that it is time to ditch the survey as the default method of choice, and we encourage our colleagues to think outside the checkbox. We also invite you to continue this discussion on Twitter using the #DitchTheSurvey hashtag.

Additional Resources

We want you to have all the help you need in expanding your repertoire of research methods. The following resources will help you learn more about research methods, research questions, and data analysis.

Arksey, H. & Knight, P. (1999). Interviewing for social scientists: An introductory resource with examples. London: Sage Publications.

A detailed guide to conducting in-depth interviews and focus groups. This book contains instruction on designing a study, interviewing, protecting the rights and welfare of participants, and transcribing and analyzing data.

Connor, E. (Ed.). (2006). Evidence-based librarianship: Case studies and active learning exercises. Oxford: Chandos Publishing.

Intended for practicing librarians, library students, and library educators, this book provides case studies and exercises for developing evidence-based decision-making skills.

Corbin, J., & Strauss, A. (2014). Basics of qualitative research: Techniques and procedures for developing grounded theory (4th ed.). Thousand Oaks, CA: Sage Publications.

The late Anselm Strauss is considered, with Barney Glaser, a co-founder of grounded theory. This book presents methods that enable researchers to analyze, interpret, and make sense of their data, and ultimately build theory from it. Considered a landmark text for qualitative research methods, the book provides definitions, illustrative examples, and guiding questions for beginning grounded theory projects.

Eldredge, J. D. (2004). Inventory of research methods for librarianship and informatics. Journal of the Medical Library Association, 92(1), 83–90.

An annotated listing of research methods used in librarianship. The author provides a definition and a description of each method. Some descriptions include a helpful resource to learn more about the method, and some include citations of articles in which the method was employed.

“EBL 101” Section. Evidence Based Library and Information Practice. Online: http://ejournals.library.ualberta.ca/index.php/EBLIP/

The “EBL 101” section of this journal provides explanations of a different research method in each issue. Each method is defined and described, and includes information about when the method could be used and procedures for conducting a study using the method. It also includes a listing of additional resources for further learning.

Fink, A. (2013). How to conduct surveys: A step-by-step guide (5th ed.). Thousand Oaks, CA: Sage Publications.

This how-to book guides readers through the process of designing surveys and assessing the credibility of others’ surveys. Fink focuses on choosing appropriate surveys, wording and ordering survey questions, selecting survey participants, and interpreting the results.

Guest, G., Namey, E. E., & Mitchell, M. L. (2013). Collecting qualitative data: A field manual for applied research. Thousand Oaks, CA: Sage Publications.

This book explains options for collecting qualitative data, including the more common ones, such as in-depth interviews and focus groups, and the less common ones, such as lists, timelines, and drawings.

Stephen, P., & Hornby, S. (1995). Simple statistics for library and information professionals. London: Library Association Publishing.

Authored by two library and information science educators, this book is “written sympathetically” for the library and information professional who is scared of statistics. It offers a simple explanation of the statistical methods one may employ when analyzing quantitative data, focusing on what the various statistical tests do and when they should be used.

Trochim, W. M. K. (2006). Research methods knowledge base. Online: http://www.socialresearchmethods.net/kb/index.php

This resource is a complete social research methods course in a convenient online format. Librarians and information science professionals can work through the course at their own pace, or view specific pages relevant to their interests. It includes basic foundations of research, project design and analysis, and guidance on writing up your results.

Wildemuth, B. (2009). Applications of social research methods to questions in information and library science. Westport, CT: Libraries Unlimited.

This book is intended to fill the gap in traditional library and information science research methods courses, which often rely on textbooks from fields such as sociology and psychology, by providing examples relevant to LIS. Its stated purpose is “to improve [LIS professionals’] ability to conduct effective research studies.” Wildemuth includes examples of effective use of different research methods and applications by prominent LIS researchers.

References

Booth, A. (2000, July). Librarian heal thyself: Evidence-based librarianship, useful, practicable, desirable. In 8th International Congress on Medical Librarianship, 2nd-5th July.

Booth, A. (2003). Bridging the research-practice gap? The role of evidence based librarianship. New Review of Information and Library Research, 9(1), 3-23.

Clance, P. R., & Imes, S. A. (1978). The imposter phenomenon in high achieving women: Dynamics and therapeutic intervention. Psychotherapy: Theory, Research & Practice, 15(3), 241.

Crawford, G. A. (2015). The academic library and student retention and graduation: An exploratory study. portal: Libraries and the Academy, 15(1), 41-57. doi:10.1353/pla.2015.0003

Davies, K. (2012). Content analysis of research articles in information systems (LIS) journals. Library & Information Research, 36(112), 16-28.

Devine, P. (2003). Secondary data analysis. In R. L. Miller & J. D. Brewer (Eds.), The A-Z of social research (pp. 286-289). London, England: Sage Publications, Ltd. doi:10.4135/9780857020024.n97

Eldredge, J. D. (1997). Evidence based librarianship: A commentary for Hypothesis. Hypothesis: The Newsletter of the Research Section of MLA, 11(3), 4-7.

Eldredge, J. D. (2000a). Evidence-based librarianship: An overview. Bulletin of the Medical Library Association, 88(4), 289-302.

Eldredge, J. D. (2000b). Evidence-based librarianship: Searching for the needed EBL evidence. Medical Reference Services Quarterly, 19(3), 1-18.

Finch, J. (1987). The vignette technique in survey research. Sociology, 21(1), 105-114.

Fink, A. (2013). How to conduct surveys: A step-by-step guide. Thousand Oaks, CA: Sage Publications, Inc.

Fu, S., Gardinier, H., & Martin, M. (2014). Ethnographic study of student research frustrations. Research presented at the California Academic & Research Libraries Conference, San Jose, CA.

González‐Alcaide, G., Castelló‐Cogollos, L., Navarro‐Molina, C., Aleixandre‐Benavent, R., & Valderrama‐Zurián, J. C. (2008). Library and information science research areas: Analysis of journal articles in LISA. Journal of the American Society for Information Science and Technology, 59(1), 150-154.

Guest, G., Namey, E., & Mitchell, M. (2013). Collecting qualitative data: A field manual for applied research. Thousand Oaks, CA: Sage Publications, Inc.

Haythornthwaite, C. (1996). Social network analysis: An approach and technique for the study of information exchange. Library and Information Science Research, 18(4), 323-342. doi:10.1016/S0740-8188(96)90003-1

Hider, P., & Pymm, B. (2008). Empirical research methods reported in high-profile LIS journal literature. Library and Information Science Research, 30(2), 108-114.

Hildreth, C. R., & Aytac, S. (2007). Recent library practitioner research: A methodological analysis and critique. Journal of Education for Library and Information Science, 48(3), 236-258.

Kennedy, M., & Brancolini, K. (2012). Academic librarian research: A survey of attitudes, involvement, and perceived capabilities. College & Research Libraries, 73(5), 431-448. doi:10.5860/crl-276

Kennedy, M. R., Kennedy, D.P., & Brancolini, K. (2015). The personal networks of novice librarian researchers. Paper presented at the 7th International Conference on Qualitative and Quantitative Methods in Libraries, Paris, France.

Koufogiannakis, D., Slater, L., & Crumley, E. (2004). A content analysis of librarianship research. Journal of Information Science, 30(3), 227-239.

Luo, L., & McKinney, M. (in press). JAL in the past decade: A comprehensive analysis of academic library research. Journal of Academic Librarianship.

Nkwi, P., Nyamongo, I., & Ryan, G. (2001). Field research into socio-cultural issues: Methodological guidelines. Yaoundé, Cameroon: International Center for Applied Social Sciences, Research and Training/UNFPA.

Oakley, A. (2004). Randomized control trial. In M. Lewis-Beck, A. Bryman, & T. Liao (Eds.), Encyclopedia of social science research methods. (pp. 918-920). Thousand Oaks, CA: Sage Publications, Inc.

Peritz, B. C. (1980). The methods of library science research: Some results from a bibliometric survey. Library Research, 2(3), 251-268.

Schutt, R. (2012). Investigating the social world: The process and practice of research. Thousand Oaks, CA: Sage Publications, Inc.

Shaffer, B. A. (2011). Graduate student library research skills: Is online instruction effective? Journal of Library & Information Services in Distance Learning, 5(1/2), 35-55.

Shoaf, E. C. (2003). Using a professional moderator in library focus group research. College & Research Libraries, 64(2), 124-132.

Tuomaala, O., Järvelin, K., & Vakkari, P. (2014). Evolution of library and information science, 1965–2005: Content analysis of journal articles. Journal of the Association for Information Science and Technology, 65(7), 1446-1462.

Turcios, M. E., Agarwal, N.K., & Watkins, L. (2014). How much of library and information science literature qualifies as research? The Journal of Academic Librarianship, 40(5), 473-479.

Van Kampen, D. J. (2004). Development and validation of the multidimensional library anxiety scale. College & Research Libraries, 65(1), 28–34.

Walia, P. K., & Kaur, M. (2012). Content analysis of journal literature published from UK and USA. Library Philosophy & Practice, 833, 1-17.

Yi-Chu, L., & Ming-Hsin, C. (2012). A study of college students’ preference of servicescape in academic libraries. Journal of Educational Media & Library Sciences, 49(4), 630-636.

This article would still be only a messy Google Doc if not for the insightful and encouraging revisions from Brett Bonfield and Denise Koufogiannakis; you two helped us craft the article we set out to write while adding a fresh perspective. This article was dreamed up during the 2014 Institute for Research Design in Librarianship, a two-week intensive research seminar and lovefest led by our mentors Lili Luo, Greg Guest, Marie Kennedy, and Kristine Brancolini. We owe you immense gratitude for helping us become self-described “research methods geeks.” And a huge thank you to our entire IRDL cohort for their continued support, encouragement, and camaraderie (including, of course, our colleague Emily).

